—-

이탤릭 볼드 이탤릭볼드

Workflow stages

- Question or problem definition.

- Acquire training and testing data.

- Wrangle, prepare, cleanse the data.

- Analyze, identify patterns, and explore the data.

- Model, predict and solve the problem.

- Visualize, report, and present the problem solving steps and final solution.

- Supply or submit the results.

기본적으로 설치되어 있어야하는 패키지는 아래 코드 를 사용한다.

import cv2

import matplotlib.pyplot as plt

import numpy as np

import glob, os

import keras

from keras import layers

from keras.models import Model

from sklearn.utils import shuffle

from sklearn.model_selection import train_test_split

from imgaug import augmenters as iaa # 이미지를 변형하는 패키지

import random

Data 전처리

filename, _ = os.path.splitext(os.path.basename(img_path))

subject_id, etc = filename.split('__')

gender, lr, finger, _ = etc.split('_')

gender = 0 if gender == 'M' else 1

lr = 0 if lr =='Left' else 1

if finger == 'thumb':

finger = 0

elif finger == 'index':

finger = 1

elif finger == 'middle':

finger = 2

elif finger == 'ring':

finger = 3

elif finger == 'little':

finger = 4

return np.array([subject_id, gender, lr, finger], dtype=np.uint16)

def extract_label2(img_path):

filename, _ = os.path.splitext(os.path.basename(img_path))

subject_id, etc = filename.split('__')

gender, lr, finger, _, _ = etc.split('_')

gender = 0 if gender == 'M' else 1

lr = 0 if lr =='Left' else 1

if finger == 'thumb':

finger = 0

elif finger == 'index':

finger = 1

elif finger == 'middle':

finger = 2

elif finger == 'ring':

finger = 3

elif finger == 'little':

finger = 4

return np.array([subject_id, gender, lr, finger], dtype=np.uint16)

img_list = sorted(glob.glob('Real/*.BMP'))

print(len(img_list))

imgs = np.empty((len(img_list), 96, 96), dtype=np.uint8)

labels = np.empty((len(img_list), 4), dtype=np.uint16)

for i, img_path in enumerate(img_list):

img = cv2.imread(img_path, cv2.IMREAD_GRAYSCALE)

img = cv2.resize(img, (96, 96))

imgs[i] = img

# subject_id, gender, lr, finger

labels[i] = extract_label(img_path)

np.save('dataset/x_real.npy', imgs)

np.save('dataset/y_real.npy', labels)

plt.figure(figsize=(1, 1))

plt.title(labels[-1])

plt.imshow(imgs[-1], cmap='gray')

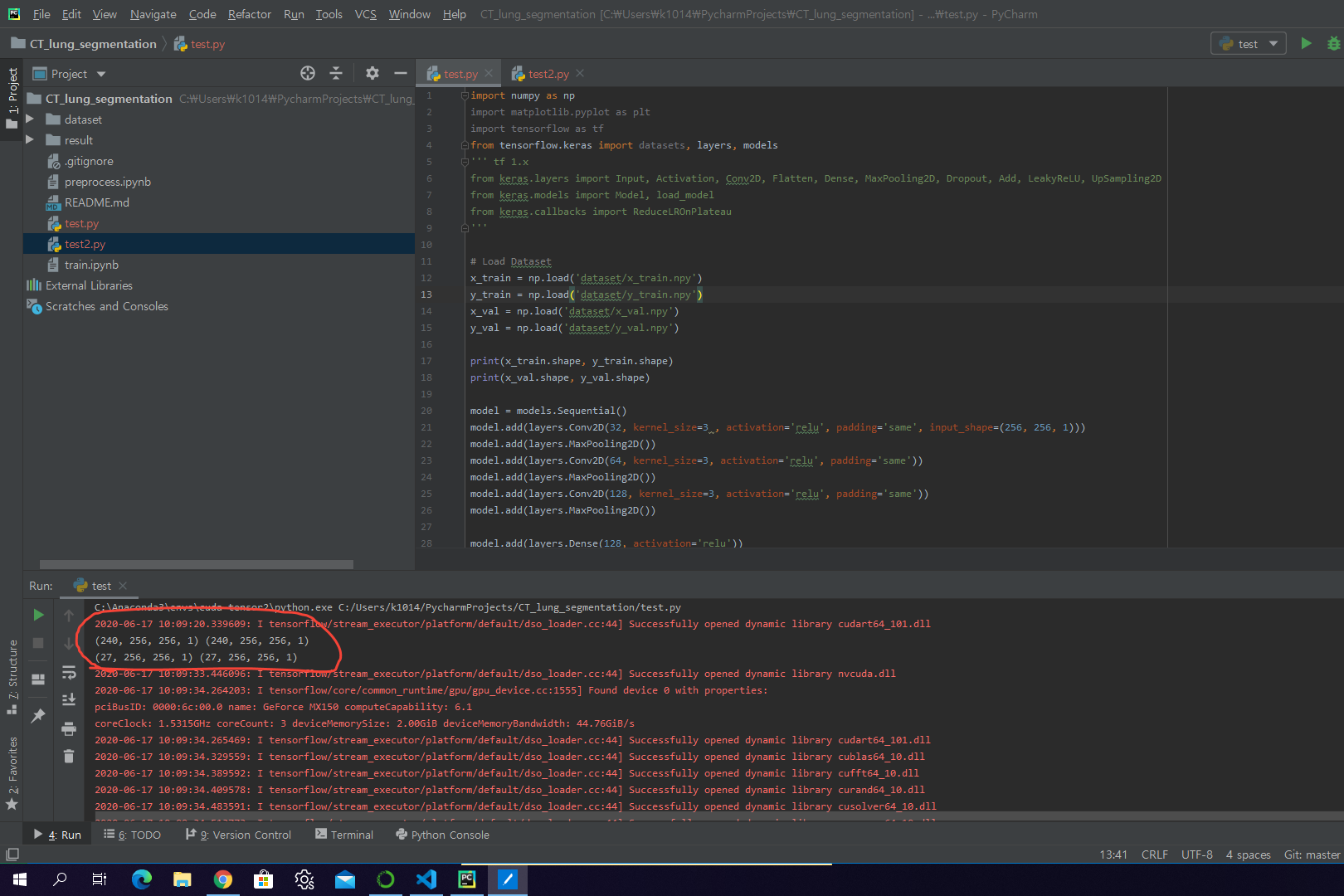

Load Dataset

Encoder 차원 축소를 통해 핵심요소 추출 (DownSampling, ex MaxPooling2D)

Decoder 압축된 정보로부터 차원 확장을 통해 원하는 정보로 복원 ( UpsSampling , Upsampling2D)

x_real = np.load('dataset/x_real.npz')['data']

y_real = np.load('dataset/y_real.npy')

x_easy = np.load('dataset/x_easy.npz')['data']

y_easy = np.load('dataset/y_easy.npy')

x_medium = np.load('dataset/x_medium.npz')['data']

y_medium = np.load('dataset/y_medium.npy')

x_hard = np.load('dataset/x_hard.npz')['data']

y_hard = np.load('dataset/y_hard.npy')

print(x_real.shape, y_real.shape)

plt.figure(figsize=(15, 10))

plt.subplot(1, 4, 1)

plt.title(y_real[0])

plt.imshow(x_real[0].squeeze(), cmap='gray')

plt.subplot(1, 4, 2)

plt.title(y_easy[0])

plt.imshow(x_easy[0].squeeze(), cmap='gray')

plt.subplot(1, 4, 3)

plt.title(y_medium[0])

plt.imshow(x_medium[0].squeeze(), cmap='gray')

plt.subplot(1, 4, 4)

plt.title(y_hard[0])

plt.imshow(x_hard[0].squeeze(), cmap='gray')

모델 사용